We want a good development environment, and sometimes we need to visualize output, or even the files we are working with, from inside a Docker container.

When you run the container after building it, it should launch the Firefox-based Jupyter Lab development environment.

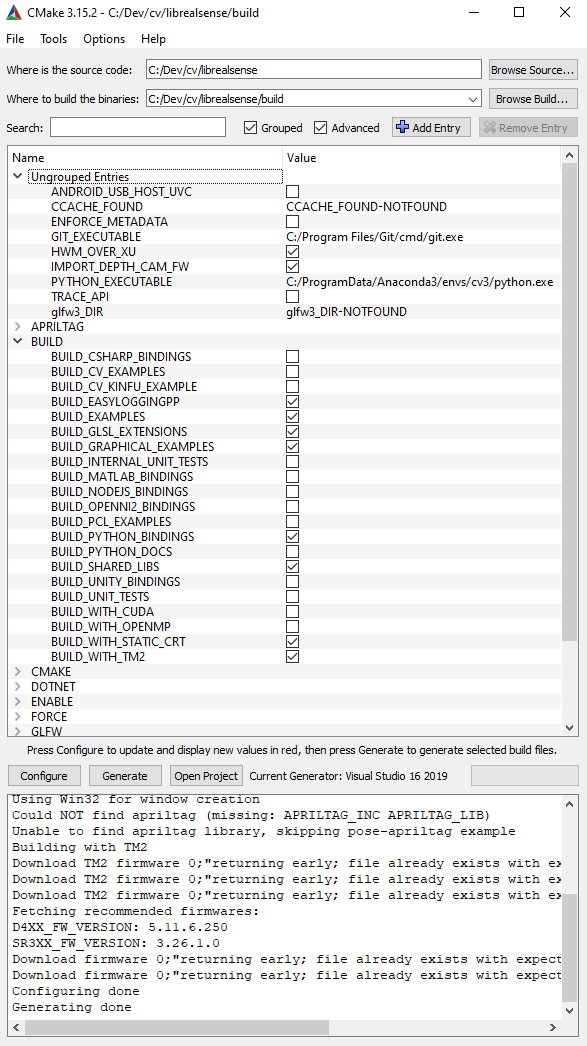

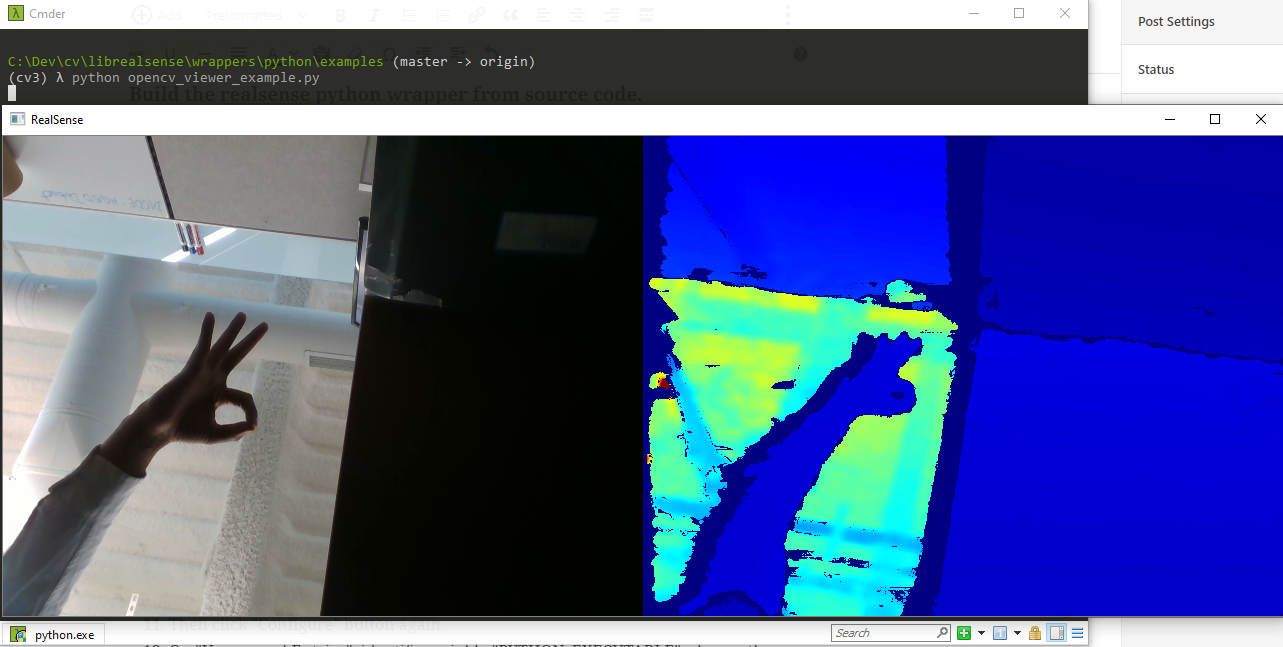

You can find the contents of Dockerfile.bionic.gui at the bottom of this page. It sets up a conda Python development environment with OpenCV and Jupyter notebooks. The main idea is that GUI applications are launched inside the container and shown on the host's display using X11 forwarding, here done by sharing the host's X11 socket with the container.
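Before starting the container, it can help to confirm that the X11 socket to be shared actually exists on the host. The following is a minimal sketch, assuming the usual Linux convention that display `:N` is served from `/tmp/.X11-unix/XN`; the helper names `x11_socket_path` and `check_x11_socket` are hypothetical and not part of the original setup.

```shell
# Map a DISPLAY value such as ":0" or ":1.0" to its local X11 socket path.
# With no argument, the current $DISPLAY is used.
x11_socket_path() {
    local display="${1:-${DISPLAY:-}}"
    local number="${display#*:}"   # drop the optional host part and the colon
    number="${number%%.*}"         # drop the optional ".screen" suffix
    printf '/tmp/.X11-unix/X%s\n' "$number"
}

# Report whether the socket that the container will bind-mount exists.
check_x11_socket() {
    local path
    path="$(x11_socket_path "$1")"
    if [ -S "$path" ]; then
        echo "X11 socket found: $path"
    else
        echo "X11 socket missing: $path" >&2
        return 1
    fi
}
```

Running `check_x11_socket` with no argument on the host inspects the current `$DISPLAY`. If the socket exists but GUI programs in the container are still refused, the X server's access control may be the cause; `xhost +local:` is a common way to allow local clients, at the cost of loosening access control on the host.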

1. Prerequisites

- Linux docker host

- NVIDIA drivers >= 418.39

- Docker, to build the image from the Dockerfile

- nvidia-container-toolkit, for GPU access inside containers

- GPU Test

sudo docker run --rm --gpus all nvidia/cuda:10.1-cudnn7-devel-ubuntu18.04 nvidia-smi

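The driver requirement in the list above can be checked on the host before building. This is a minimal sketch, assuming GNU `sort -V` is available (it is on typical Linux hosts); the helper names `version_ge` and `driver_ok` are hypothetical.

```shell
# Succeed when version $1 is greater than or equal to version $2.
# Relies on GNU sort's version ordering (-V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Compare a driver version string against the 418.39 minimum listed above.
driver_ok() {
    version_ge "$1" "418.39"
}
```

On a host with the NVIDIA driver installed, the installed version can be passed in with `driver_ok "$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)" && echo "driver is recent enough"`.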
The Dockerfile installs the following packages:

- Python 3.8 provided by Miniconda

- OpenCV 3.4.7

- Jupyter Lab

2. Build this Docker Image

DOCKER_BUILDKIT=1 docker build --rm -f Dockerfile.bionic.gui -t ubuntu-dev:cuda10.1-ubuntu18 .

3. Run this Docker Image

docker run --rm -it --gpus all --privileged --net=host --ipc=host -e DISPLAY=$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix --volume=$PWD:/home/jdoe/workspace -p 58080:8080 --name ubuntu-dev ubuntu-dev:cuda10.1-ubuntu18

Dockerfile.bionic.gui

FROM nvidia/cuda:10.1-cudnn7-devel-ubuntu18.04

LABEL \

maintainer="Rahul" \

build="DOCKER_BUILDKIT=1 docker build --rm -f Dockerfile.bionic.gui -t ubuntu-dev:cuda10.1-ubuntu18 . " \

install="docker run --rm -it --gpus all --privileged --net=host --ipc=host -e DISPLAY=\$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix --volume=\$PWD:/home/jdoe/workspace -p 58080:8080 --name ubuntu-dev ubuntu-dev:cuda10.1-ubuntu18 bash"

RUN apt-get update && apt-get install -y -qq firefox \

build-essential tar wget bzip2 unzip curl \

git net-tools vim tmux rsync sudo less cmake \

x11-apps libxext-dev libxrender-dev libxtst-dev \

libcanberra-gtk-module libcanberra-gtk3-module \

libgtk2.0-dev libgtk-3-dev pkg-config \

libavformat-dev libavcodec-dev libavfilter-dev \

libjpeg-dev libpng-dev libtiff-dev edisplay \

libswscale-dev zlib1g-dev libopenexr-dev \

libeigen3-dev libtbb-dev libtbb2 \

libv4l-dev libxvidcore-dev libx264-dev \

gfortran libatlas-base-dev ffmpeg \

libgstreamer-plugins-base1.0-dev \

libgstreamer1.0-dev libprotobuf-dev \

python3-dev python3-numpy

# libopencv-dev python3-opencv

# -------------- 1. Configure Environment --------------

ENV SHELL=/bin/bash \

NB_USER=jdoe \

NB_UID=1000 \

NB_GID=1000 \

LANGUAGE=en_US.UTF-8

ENV HOME=/home/$NB_USER

ENV CONDA_HOME=$HOME/miniconda

# ------------- 2. Prepare OS User --------------

RUN mkdir -p /home/${NB_USER} && \

echo "${NB_USER}:x:${NB_UID}:${NB_GID}:${NB_USER},,,:/home/${NB_USER}:/bin/bash" >> /etc/passwd && \

echo "${NB_USER}:x:${NB_UID}:" >> /etc/group && \

echo "${NB_USER} ALL=(ALL) NOPASSWD: ALL" > /etc/sudoers.d/${NB_USER} && \

chmod 0440 /etc/sudoers.d/${NB_USER} && \

chown ${NB_UID}:${NB_GID} -R /home/${NB_USER}

RUN sh -c 'echo "/usr/local/lib64" >> /etc/ld.so.conf.d/opencv.conf' && \

sh -c 'echo "/usr/local/lib" >> /etc/ld.so.conf.d/opencv.conf'

# ------------- 3. Install Conda ----------------

USER $NB_USER

WORKDIR $HOME/

# Install Miniconda to CONDA_HOME ($HOME/miniconda)

RUN curl -LO https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

RUN bash Miniconda3-latest-Linux-x86_64.sh -p $CONDA_HOME -b

RUN rm Miniconda3-latest-Linux-x86_64.sh

ENV PATH=$CONDA_HOME/bin:/usr/local/bin/:${PATH}

# Python packages from conda

RUN conda update -y conda && conda init bash && \

conda config --env --set always_yes true && \

conda create -y -n pydev python=3.7 pip tqdm pandas \

pillow requests scikit-image \

jupyterlab opencv matplotlib && \

echo ". $CONDA_HOME/etc/profile.d/conda.sh" >> $HOME/.bashrc && \

echo "conda activate pydev" >> $HOME/.bashrc

ENV PATH=$CONDA_HOME/envs/pydev/bin:$PATH

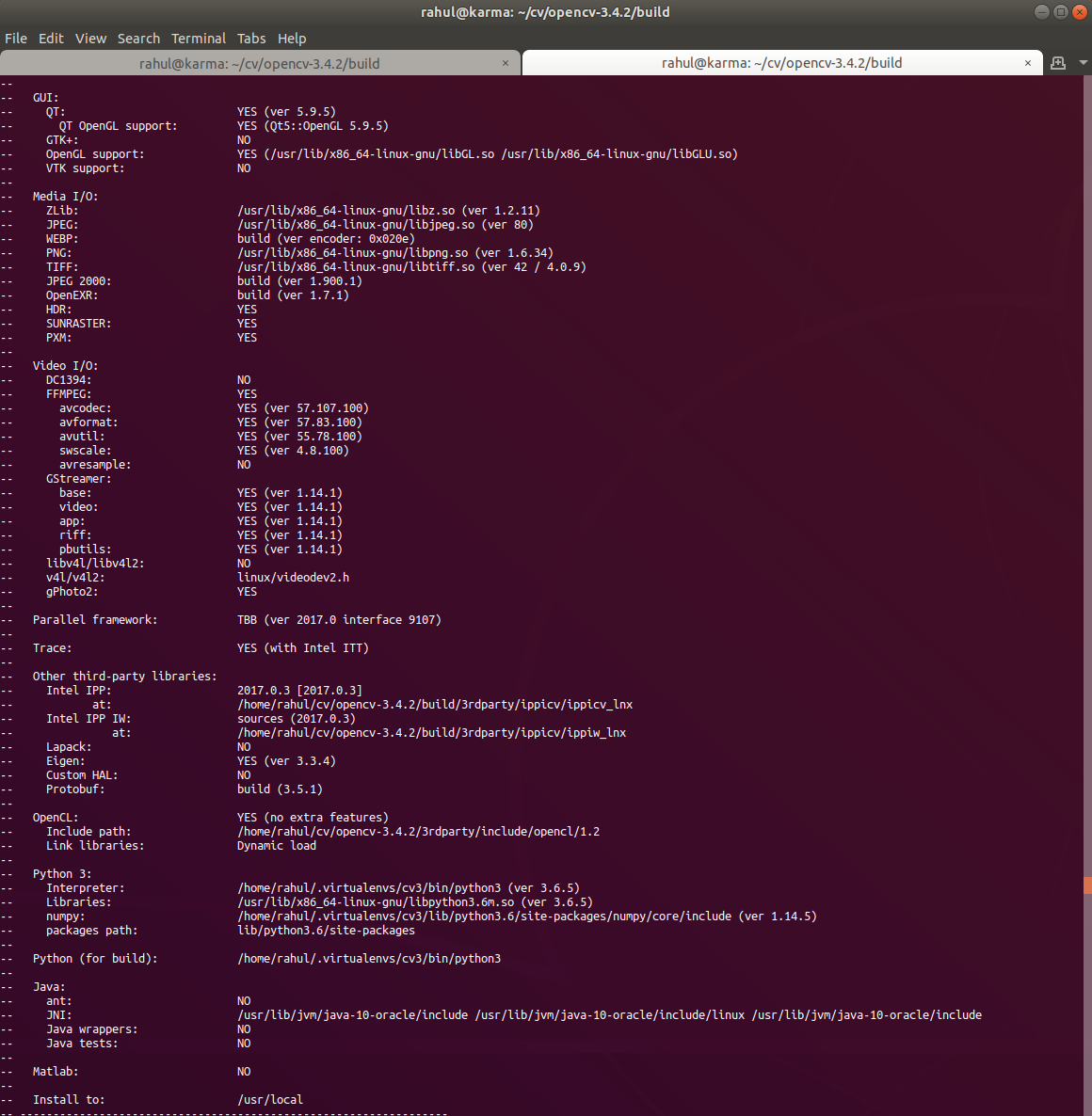
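A note on the `ENV PATH=$CONDA_HOME/envs/pydev/bin:$PATH` line above: the `conda activate pydev` entry in `.bashrc` only takes effect in interactive bash shells, while `CMD jupyter lab` is started through `/bin/sh -c`, so the `PATH` set by `ENV` is what decides which `jupyter` gets found. The sketch below illustrates that first-match-wins lookup; `resolve_in_path` is a hypothetical helper written for this illustration.

```shell
# Resolve a command name against an explicit PATH value.
# The lookup runs in a subshell so the caller's PATH is left untouched.
# The first directory in the list that contains the command wins.
resolve_in_path() {
    local name="$1"
    local search_path="$2"
    (
        PATH="$search_path"
        command -v "$name"
    )
}
```

Inside the built image, `docker run --rm ubuntu-dev:cuda10.1-ubuntu18 sh -c 'command -v jupyter'` should resolve to a path under `$CONDA_HOME/envs/pydev/bin`, since that directory is placed first.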

# ------------- 4. Install OpenCV ----------------

# OpenCV 3.4.7 : wget --quiet https://github.com/opencv/opencv/archive/3.4.7.zip -O opencv.zip

# OpenCV Contrib : wget --quiet https://github.com/opencv/opencv_contrib/archive/3.4.7.zip -O opencv_contrib.zip

ENV CV_VERSION="3.4.7"

RUN wget https://github.com/opencv/opencv/archive/$CV_VERSION.zip -O opencv.zip && \

unzip opencv.zip && cd opencv-$CV_VERSION/ && mkdir -p build && cd build && \

cmake -D CMAKE_BUILD_TYPE=RELEASE \

-D CMAKE_INSTALL_PREFIX=/usr/local \

-D WITH_EIGEN=OFF \

-D INSTALL_C_EXAMPLES=ON \

-D INSTALL_PYTHON_EXAMPLES=ON \

-D OPENCV_GENERATE_PKGCONFIG=ON \

-D BUILD_opencv_python3=ON \

-D BUILD_opencv_python2=ON \

-D PYTHON3_LIBRARY=$CONDA_HOME/lib/python3.8 \

-D PYTHON3_INCLUDE_DIR=$CONDA_HOME/include/python3.8 \

-D PYTHON3_EXECUTABLE=$CONDA_HOME/bin/python \

-D PYTHON3_PACKAGES_PATH=$CONDA_HOME/lib/python3.8/site-packages \

-D ENABLE_PRECOMPILED_HEADERS=OFF \

-D BUILD_EXAMPLES=ON .. && \

make -j$(nproc) && sudo make install && sudo ldconfig

RUN rm opencv.zip

# RUN conda install -y -c pytorch pytorch torchvision

RUN wget https://www.linuxtechi.com/wp-content/uploads/2014/11/docker-150x150-1.jpg

CMD jupyter lab
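With the image built from the Dockerfile above, the run command from step 3 can be wrapped in a small helper that spells out what each flag is for. This is only a sketch: the function name `run_dev_container` and the `DRY_RUN` switch are hypothetical additions, while the flags are the ones from the original command.

```shell
# Hypothetical wrapper around the step 3 run command.
# Set DRY_RUN=1 to print the command instead of executing it.
run_dev_container() {
    local image="${1:-ubuntu-dev:cuda10.1-ubuntu18}"

    set -- docker run --rm -it                        # remove the container on exit, keep a terminal attached
    set -- "$@" --gpus all                            # expose all GPUs (needs nvidia-container-toolkit)
    set -- "$@" --privileged                          # broad device access, as in the original command
    set -- "$@" --net=host --ipc=host                 # share the host network and IPC namespaces
    set -- "$@" -e "DISPLAY=${DISPLAY:-}"             # tell GUI programs which X display to use
    set -- "$@" -v /tmp/.X11-unix:/tmp/.X11-unix      # share the host's X11 socket directory
    set -- "$@" --volume="$PWD:/home/jdoe/workspace"  # mount the current directory as the workspace
    set -- "$@" -p 58080:8080                         # kept from the original; Docker discards -p under --net=host
    set -- "$@" --name ubuntu-dev "$image"

    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

Calling `DRY_RUN=1 run_dev_container` prints the assembled command for inspection, and `run_dev_container` on its own starts the container. Because the container shares the host network, Jupyter Lab is reachable directly on the host at whatever port it binds, and the `-p` mapping has no effect.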