Prerequisite: CUDA must be installed on a machine with an NVIDIA graphics card.

CUDA Setup

Installing the driver and the CUDA toolkit is described in a previous blog post, with one change: the TensorFlow setup here requires CUDA toolkit 9.0, so CUDA 9.1 is removed and replaced with 9.0.

# Remove CUDA 9.1 and install 9.0
$ sudo /usr/local/cuda/bin/uninstall_cuda_9.1.pl
$ sudo rm -rf /usr/local/cuda-9.1
$ sudo ./cuda_9.0.176_384.81_linux.run --override
# Make sure the environment variables are set before testing
$ source ~/.bashrc
# Make nvcc use gcc/g++ 6
$ sudo ln -s /usr/bin/gcc-6 /usr/local/cuda/bin/gcc
$ sudo ln -s /usr/bin/g++-6 /usr/local/cuda/bin/g++
$ cd ~/NVIDIA_CUDA-9.0_Samples/
$ make -j12
# The samples build into bin/x86_64/linux/release/
$ ./bin/x86_64/linux/release/deviceQuery
# The test is successful if deviceQuery ends with "Result = PASS"
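Before moving on, it can help to confirm from a script that the toolkit really is 9.0. This is a minimal sketch, assuming nvcc is at /usr/local/cuda/bin/nvcc and prints a line of the form "Cuda compilation tools, release 9.0, V9.0.176". The helper names are hypothetical, not part of any NVIDIA tool:

```python
import re
import subprocess


def parse_nvcc_release(output):
    """Return (major, minor) from `nvcc --version` output, or None if not found."""
    # Assumed format: "Cuda compilation tools, release 9.0, V9.0.176"
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release(nvcc="/usr/local/cuda/bin/nvcc"):
    """Run nvcc and return its (major, minor) release, or None if nvcc cannot run."""
    try:
        # subprocess.run with stdout=PIPE works on Python 3.5 (no capture_output there)
        out = subprocess.run([nvcc, "--version"], stdout=subprocess.PIPE,
                             universal_newlines=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(out)


if __name__ == "__main__":
    release = installed_cuda_release()
    print("CUDA release:", release)
    if release != (9, 0):
        print("Warning: this TensorFlow setup expects CUDA 9.0")
```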

cuDNN Setup

Based on a Medium blog post.

The following steps closely follow the official cuDNN installation guide for .deb files (oddly, the cuDNN guide is clearer than the CUDA one).

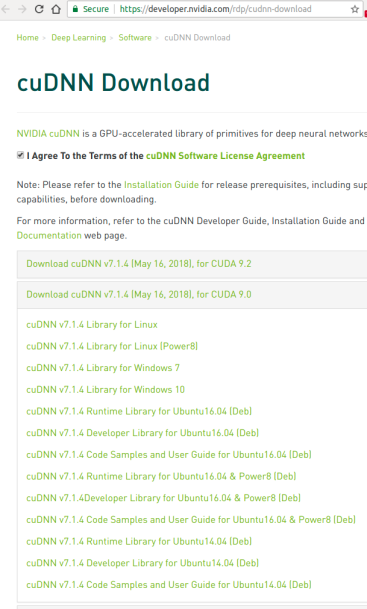

- Go to the cuDNN download page (registration required) and select the latest cuDNN 7.1.* release built for CUDA 9.0.

- Download all 3 .deb files: the runtime library, the developer library, and the code samples library for Ubuntu 16.04.

- In your download folder, install them in the same order:

# the runtime library
$ sudo dpkg -i libcudnn7_7.1.4.18-1+cuda9.0_amd64.deb
# the developer library
$ sudo dpkg -i libcudnn7-dev_7.1.4.18-1+cuda9.0_amd64.deb
# the code samples
$ sudo dpkg -i libcudnn7-doc_7.1.4.18-1+cuda9.0_amd64.deb
# to remove all three packages later
$ sudo dpkg -r libcudnn7-doc libcudnn7-dev libcudnn7
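The three file names differ only in the package name, so the install order can be spelled out programmatically. This is a small sketch, assuming the naming pattern libcudnn<major>[-dev|-doc]_<cudnn version>-1+cuda<cuda version>_amd64.deb seen above, including the "-1" Debian revision. The helper name is hypothetical:

```python
def cudnn_deb_files(cudnn_version, cuda_version, arch="amd64"):
    """List cuDNN .deb files in install order: runtime, developer, code samples."""
    major = cudnn_version.split(".")[0]  # "7.1.4.18" -> "7"
    # Assumes the "-1" Debian revision used by the files above
    suffix = "{}-1+cuda{}_{}.deb".format(cudnn_version, cuda_version, arch)
    packages = ["libcudnn{}", "libcudnn{}-dev", "libcudnn{}-doc"]
    return [p.format(major) + "_" + suffix for p in packages]


if __name__ == "__main__":
    for deb in cudnn_deb_files("7.1.4.18", "9.0"):
        print("sudo dpkg -i " + deb)
```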

Now, we can verify the cuDNN installation (below is just the official guide, which surprisingly works out of the box):

- Copy the code samples somewhere you have write access:

$ cp -r /usr/src/cudnn_samples_v7/ ~/

- Go to the MNIST example code:

$ cd ~/cudnn_samples_v7/mnistCUDNN

- Compile the MNIST example:

$ make clean && make -j4

- Run the MNIST example:

$ ./mnistCUDNN

If your installation is successful, you should see "Test passed!" at the end of the output.

(cv3) rahul@Windspect:~/cv/cudnn_samples_v7/mnistCUDNN$ ./mnistCUDNN

cudnnGetVersion() : 7104 , CUDNN_VERSION from cudnn.h : 7104 (7.1.4)

Host compiler version : GCC 5.4.0

There are 2 CUDA capable devices on your machine :

device 0 : sms 28 Capabilities 6.1, SmClock 1582.0 Mhz, MemSize (Mb) 11172, MemClock 5505.0 Mhz, Ecc=0, boardGroupID=0

device 1 : sms 28 Capabilities 6.1, SmClock 1582.0 Mhz, MemSize (Mb) 11163, MemClock 5505.0 Mhz, Ecc=0, boardGroupID=1

Using device 0

...

Result of classification: 1 3 5

Test passed!
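The output above reports the cuDNN version as the integer 7104. In cuDNN 7.x, cudnn.h defines CUDNN_VERSION as CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL, so 7104 decodes to 7.1.4. The sketch below decodes that integer and reads the same #defines from the header. It assumes the 7.x layout and the /usr/include/cudnn.h path used by the .deb packages; both function names are hypothetical:

```python
import os
import re


def decode_cudnn_version(version):
    """Split a cuDNN 7.x version integer (e.g. 7104) into (major, minor, patch)."""
    # cuDNN 7.x: CUDNN_VERSION = MAJOR * 1000 + MINOR * 100 + PATCHLEVEL
    major, rest = divmod(version, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def cudnn_version_from_header(text):
    """Read CUDNN_MAJOR/MINOR/PATCHLEVEL #defines from cudnn.h text, or None."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(r"#define\s+{}\s+(\d+)".format(name), text)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


if __name__ == "__main__":
    print(decode_cudnn_version(7104))
    header_path = "/usr/include/cudnn.h"  # location used by the .deb packages
    if os.path.exists(header_path):
        with open(header_path) as header:
            print(cudnn_version_from_header(header.read()))
```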

In case of a compilation error

Error

/usr/local/cuda/include/cuda_runtime_api.h:1683:101: error: use of enum ‘cudaDeviceP2PAttr’ without previous declaration

extern __host__ __cudart_builtin__ cudaError_t CUDARTAPI cudaDeviceGetP2PAttribute(int *value, enum cudaDeviceP2PAttr attr, int srcDevice, int dstDevice);

/usr/local/cuda/include/cuda_runtime_api.h:2930:102: error: use of enum ‘cudaFuncAttribute’ without previous declaration

extern __host__ __cudart_builtin__ cudaError_t CUDARTAPI cudaFuncSetAttribute(const void *func, enum cudaFuncAttribute attr, int value);

^

In file included from /usr/local/cuda/include/channel_descriptor.h:62:0,

from /usr/local/cuda/include/cuda_runtime.h:90,

from /usr/include/cudnn.h:64,

from mnistCUDNN.cpp:30:

Solution: edit the header as root (sudo vim /usr/include/cudnn.h) and replace the line '#include "driver_types.h"' with '#include <driver_types.h>'.
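The same edit can be scripted. This is a minimal sketch, assuming the header is at /usr/include/cudnn.h and the script runs as root. It keeps a .bak copy because it modifies a system header. One plausible reason the fix works: with quotes, GCC first searches the including file's own directory (/usr/include), where a stale driver_types.h from another CUDA install may shadow the 9.0 one, whereas angle brackets search the -I paths such as /usr/local/cuda/include first. The helper names are hypothetical:

```python
import shutil

OLD = '#include "driver_types.h"'
NEW = '#include <driver_types.h>'


def fix_driver_types_include(text):
    """Return header text with the quoted driver_types.h include switched to angle brackets."""
    return text.replace(OLD, NEW)


def patch_header(path="/usr/include/cudnn.h"):
    """Back up the header to <path>.bak, then rewrite it in place (needs root for the default path)."""
    shutil.copy2(path, path + ".bak")
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(fix_driver_types_include(text))
```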

Configure the CUDA & cuDNN Environment Variables

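Add the following lines to ~/.bashrc, then reload it:

# CUDA 9.0 binaries and libraries
export PATH=/usr/local/cuda-9.0/bin${PATH:+:${PATH}}
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+${LD_LIBRARY_PATH}:}/usr/local/cuda-9.0/lib64
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+${LD_LIBRARY_PATH}:}/usr/local/cuda-9.0/lib
# CUPTI (the CUDA Profiling Tools Interface, used by TensorFlow) is at /usr/local/cuda/extras/CUPTI/lib64;
# the cuDNN .deb packages install into the system library path, so they need no entry here
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+${LD_LIBRARY_PATH}:}/usr/local/cuda/extras/CUPTI/lib64

$ source ~/.bashrc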
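The ${PATH:+:${PATH}} idiom on the PATH line expands to ":$PATH" only when PATH is set and non-empty, so no stray ":" is left behind. That matters for LD_LIBRARY_PATH in particular, because the dynamic linker treats an empty entry as the current directory. The sketch below mirrors the prepend and append forms in Python and checks which library directories are still missing. The directory list and helper names are assumptions for illustration:

```python
import os

# Assumed directories for this setup (CUDA 9.0 libraries and CUPTI)
REQUIRED_LIB_DIRS = [
    "/usr/local/cuda-9.0/lib64",
    "/usr/local/cuda/extras/CUPTI/lib64",
]


def prepend_path(entry, current):
    """Python equivalent of  entry${VAR:+:${VAR}}  in bash."""
    return entry + (":" + current if current else "")


def append_path(current, entry):
    """Python equivalent of  ${VAR:+${VAR}:}entry  in bash."""
    return (current + ":" if current else "") + entry


def missing_entries(value, required):
    """Return the directories from `required` absent from a ':'-separated value."""
    present = set(value.split(":")) if value else set()
    return [d for d in required if d not in present]


if __name__ == "__main__":
    missing = missing_entries(os.environ.get("LD_LIBRARY_PATH", ""), REQUIRED_LIB_DIRS)
    print("missing from LD_LIBRARY_PATH:", missing or "nothing")
```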

TensorFlow installation

The Python environment is set up in a virtualenv located at /opt/pyenv/cv3:

$ source /opt/pyenv/cv3/bin/activate
$ pip install numpy scipy matplotlib
$ pip install scikit-image scikit-learn ipython
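Since every pip command below should run inside the activated virtualenv, a quick check can save a system-wide install by mistake. This is a minimal sketch: classic virtualenv (before 20.0) sets sys.real_prefix, while the stdlib venv module and newer virtualenv make sys.base_prefix differ from sys.prefix. The function name is hypothetical:

```python
import sys


def in_virtualenv():
    """Return True when running inside a virtualenv or venv environment."""
    if hasattr(sys, "real_prefix"):  # classic virtualenv
        return True
    # venv and virtualenv >= 20: base_prefix points at the system interpreter
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


if __name__ == "__main__":
    print("prefix:", sys.prefix)  # should be /opt/pyenv/cv3 in this setup
    print("inside a virtualenv:", in_virtualenv())
```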

The install commands below are from the official TensorFlow guide:

$ pip install --upgrade tensorflow # for Python 2.7

$ pip3 install --upgrade tensorflow # for Python 3.n

$ pip install --upgrade tensorflow-gpu # for Python 2.7 and GPU

$ pip3 install --upgrade tensorflow-gpu==1.5 # for Python 3.n and GPU

# remove tensorflow

$ pip3 uninstall tensorflow-gpu
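The tensorflow-gpu version has to match the CUDA toolkit installed above. According to TensorFlow's release notes, prebuilt tensorflow-gpu binaries were built against CUDA 9 and cuDNN 7 starting with 1.5, and 1.13 switched to CUDA 10.0. The sketch below encodes that rule; double-check the official tested build configurations before relying on it. The helper is hypothetical and only handles plain numeric versions such as "1.10.0", not release candidates:

```python
def parse_version(text):
    """Turn '1.5' or '1.10.0' into a tuple of ints, e.g. (1, 10, 0)."""
    return tuple(int(part) for part in text.split("."))


def built_for_cuda9(tf_version):
    """True if a tensorflow-gpu release falls in the CUDA 9.0 range 1.5 .. 1.12."""
    major_minor = parse_version(tf_version)[:2]
    return (1, 5) <= major_minor <= (1, 12)


if __name__ == "__main__":
    for version in ("1.4.1", "1.5", "1.10.0", "1.13.1"):
        print(version, built_for_cuda9(version))
```

Comparing integer tuples rather than strings matters here: as strings, "1.10" sorts before "1.5".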

Now, run a test

(cv3) rahul@Windspect:~$ python

Python 3.5.2 (default, Nov 23 2017, 16:37:01)

[GCC 5.4.0 20160609] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

>>> hello = tf.constant('Hello, TensorFlow!')

>>> sess = tf.Session()

2018-08-14 18:03:45.024181: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2018-08-14 18:03:45.261898: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1405] Found device 0 with properties:

name: GeForce GTX 1080 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.582

pciBusID: 0000:03:00.0

totalMemory: 10.91GiB freeMemory: 10.75GiB

2018-08-14 18:03:45.435881: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1405] Found device 1 with properties:

name: GeForce GTX 1080 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.582

pciBusID: 0000:04:00.0

totalMemory: 10.90GiB freeMemory: 10.10GiB

2018-08-14 18:03:45.437318: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1484] Adding visible gpu devices: 0, 1

2018-08-14 18:03:46.100062: I tensorflow/core/common_runtime/gpu/gpu_device.cc:965] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-08-14 18:03:46.100098: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0 1

2018-08-14 18:03:46.100108: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] 0: N Y

2018-08-14 18:03:46.100114: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] 1: Y N

2018-08-14 18:03:46.100718: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1097] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 10398 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1080 Ti, pci bus id: 0000:03:00.0, compute capability: 6.1)

2018-08-14 18:03:46.262683: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1097] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:1 with 9769 MB memory) -> physical GPU (device: 1, name: GeForce GTX 1080 Ti, pci bus id: 0000:04:00.0, compute capability: 6.1)

>>> print(sess.run(hello))

b'Hello, TensorFlow!'

TensorFlow discovers both NVIDIA GPUs and creates a device (GPU:0 and GPU:1) on each of them.
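The "Created TensorFlow device" lines in the log are the ones that confirm a GPU is actually in use. TensorFlow writes them to stderr, so in a script or CI job you could save them (e.g. python test.py 2> tf.log) and check them programmatically. This is a sketch, assuming the exact log wording shown above, which may change between TensorFlow versions; the regex and function name are hypothetical:

```python
import re
import sys

# Assumes the TF 1.x wording: "Created TensorFlow device (<name> with <N> MB memory)
# -> physical GPU (device: <i>, name: <gpu name>, ..."
DEVICE_RE = re.compile(
    r"Created TensorFlow device \((\S+) with (\d+) MB memory\) -> physical GPU "
    r"\(device: (\d+), name: ([^,]+),")


def gpus_from_log(log_text):
    """Return (tf_device, memory_mb, physical_index, name) for each GPU device created."""
    return [(m.group(1), int(m.group(2)), int(m.group(3)), m.group(4))
            for m in DEVICE_RE.finditer(log_text)]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as log:
        for device in gpus_from_log(log.read()):
            print(device)
```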

Keras

Now add Keras to the virtualenv:

$ pip install pillow h5py keras autopep8

Edit the configuration file ~/.keras/keras.json (e.g. vim ~/.keras/keras.json):

{
    "image_data_format": "channels_last",
    "backend": "tensorflow",
    "epsilon": 1e-07,
    "floatx": "float32"
}
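The same file can be written from Python, which is handy when provisioning several machines. This is a minimal sketch using only the json and os modules; the values are the ones shown above, and the function name is hypothetical:

```python
import json
import os

KERAS_CONFIG = {
    "image_data_format": "channels_last",
    "backend": "tensorflow",
    "epsilon": 1e-07,
    "floatx": "float32",
}


def write_keras_config(path=os.path.expanduser("~/.keras/keras.json"), config=KERAS_CONFIG):
    """Write the Keras config file, creating its directory if it does not exist yet."""
    directory = os.path.dirname(path)
    if directory:
        os.makedirs(directory, exist_ok=True)
    with open(path, "w") as f:
        json.dump(config, f, indent=4)
    return path
```

Calling write_keras_config() with no arguments writes to ~/.keras/keras.json and overwrites any existing file.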

To test Keras, import it at the Python prompt:

(cv3) rahul@Windspect:~/workspace$ python
Python 3.5.2 (default, Nov 23 2017, 16:37:01)
[GCC 5.4.0 20160609] on linux
>>> import keras
Using TensorFlow backend.
>>>
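The "Using TensorFlow backend." line confirms which backend Keras picked. In multi-backend Keras 2.x, the "backend" key in keras.json sets the default, and an exported KERAS_BACKEND environment variable overrides it. The sketch below mimics that precedence in simplified form. It is not Keras's own code, and resolve_keras_backend is a hypothetical name:

```python
import json
import os


def resolve_keras_backend(config_path, environ=os.environ):
    """Simplified sketch of how multi-backend Keras 2.x picks its backend."""
    backend = "tensorflow"  # default when no config file exists
    if os.path.exists(config_path):
        with open(config_path) as f:
            backend = json.load(f).get("backend", backend)
    # An exported KERAS_BACKEND variable takes precedence over keras.json
    return environ.get("KERAS_BACKEND", backend)
```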

END.